AI·빅데이터 융합 경영학 Study Note

[ML수업] 8주차 실습6: feature engineering- Feature Generation 본문

## 6. Feature Generation ###

- 그룹별 summary: Ex) state는 고객이 위치한 36개 주를 나타냄 => 주별로 평균 cost를 계산하여 새로운 feature 생성

- 기존 feature 간의 결합: Ex) 1인당 견적을 계산

- 개별 feature 의 함수적 변환: Ex) np.log, np.sqrt, np.square 등을 사용

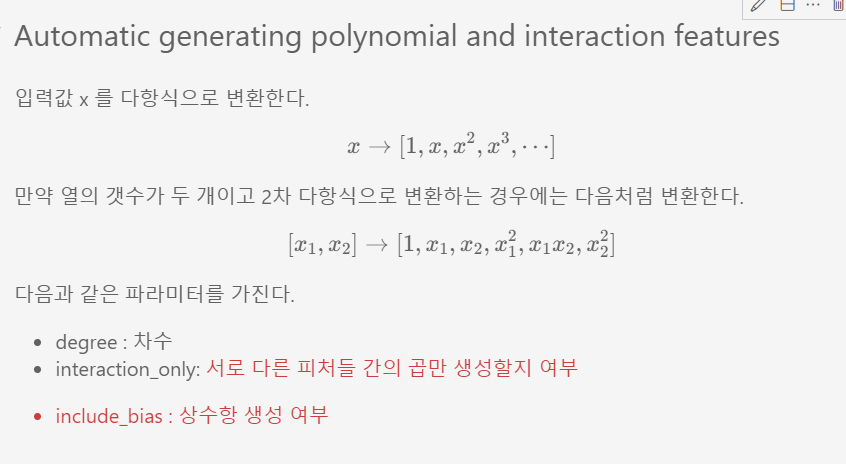

- 상호작용과 다항식 추가: 아래 참

# 이전에 배우고 실행했던 코드

import pandas as pd

import numpy as np

from sklearn.datasets import load_breast_cancer

from sklearn.model_selection import train_test_split

from sklearn.svm import SVC

from sklearn.preprocessing import PowerTransformer

from sklearn.feature_selection import SelectKBest

cancer = load_breast_cancer()

X_train, X_test, y_train, y_test = train_test_split(cancer.data, cancer.target, random_state=0)

scaler = PowerTransformer(standardize=True)

X_train_sc3 = scaler.fit(X_train).transform(X_train)

X_test_sc3 = scaler.transform(X_test)

svm = SVC(random_state=0)from sklearn.preprocessing import PolynomialFeatures

X = np.arange(1,7).reshape(3, 2); Xpoly = PolynomialFeatures(3)

poly.fit_transform(X)# 코드 추가

df = pd.DataFrame(poly.fit_transform(X)); df.columns=poly.get_feature_names_out()# 코드 변경

poly = PolynomialFeatures(interaction_only=True, include_bias=False)

poly.fit_transform(X)

'''

array([[ 1., 2., 2.],

[ 3., 4., 12.],

[ 5., 6., 30.]])

'''poly.get_feature_names_out()

#array(['x0', 'x1', 'x0 x1'], dtype=object)# 코드 수정

print(X_train_sc3.shape)

poly = PolynomialFeatures(2, include_bias=False)

X_train_sc3_poly = poly.fit_transform(X_train_sc3)

X_test_sc3_poly = poly.transform(X_test_sc3)

print(X_train_sc3_poly.shape, X_test_sc3_poly.shape)

svm.fit(X_train_sc3_poly, y_train).score(X_test_sc3_poly, y_test)

'''

(426, 30)

(426, 495) (143, 495)

0.9370629370629371

'''#### feature generation + feature selection

select2 = SelectKBest(k=20)

X_train_sc3_poly_fs2 = select2.fit(X_train_sc3_poly, y_train).transform(X_train_sc3_poly)

X_test_sc3_poly_fs2 = select2.transform(X_test_sc3_poly)

print(X_train_sc3_poly_fs2.shape)

svm.fit(X_train_sc3_poly_fs2, y_train).score(X_test_sc3_poly_fs2, y_test)

'''

(426, 20)

0.993006993006993

'''mask = select2.get_support()

np.array(poly.get_feature_names_out())[mask]

'''

array(['x0', 'x1', 'x2', 'x3', 'x5', 'x6', 'x7', 'x10', 'x12', 'x13',

'x17', 'x20', 'x21', 'x22', 'x23', 'x24', 'x25', 'x26', 'x27',

'x3 x10'], dtype=object)

'''

(참고)

#### Kaggle Competition에서 자주 사용되는 feature 생성방법(아래 pdf 참조)

<font color='black'><p>

- https://drive.google.com/open?id=1HDZc1mDvtmpjg9YPpUN0koHeAiqTAHRw

'AI·ML' 카테고리의 다른 글

| [ML수업] 10주차 실습2: Hyperparameter Tuning using Pipeline+Optuna 예시 코드 (0) | 2023.11.21 |

|---|---|

| [ML수업] 10주차 실습1: pipeline_basics (0) | 2023.11.21 |

| [ML수업] 8주차 실습5: feature engineering- Dimensionality Reduction (차원 축소) (0) | 2023.11.21 |

| [ML수업] 8주차 실습4: feature engineering- Feature Selection (0) | 2023.11.21 |

| [ML수업] 8주차 실습3: feature engineering- Numerical Feature Transformation (수치형) (0) | 2023.11.21 |